As the excitement around AI continues to build, it’s tempting to throw the latest shiny tool at every problem, especially in education. With models like ChatGPT and Gemini dazzling us with their ability to generate fluent, human-like responses, it’s natural to ask:

Why not use them to mark student work?

At Graide, we’ve explored this question deeply. And the answer, grounded in both technical insight and ethical responsibility, is clear:

You shouldn’t use LLMs to grade.

LLMs aren’t just smarter autograders; they are something entirely different.

To understand why, it helps to distinguish between two broad categories of AI: classification models and generative models.

Classification models, the kind we champion at Graide, are designed to evaluate. They learn from examples, categorise new data and provide interpretable results. They are trained, tested and implemented to say things like, “This essay demonstrates critical thinking,” or “This response lacks evidence.” You can benchmark them, audit them and, most importantly, you can trust them.
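To make "learn from examples, categorise new data and provide interpretable results" concrete, here is a minimal sketch of a classification-style marker. It is a toy word-overlap classifier written for illustration only, not a description of how Graide's models work; the training sentences, the labels `uses_evidence` and `lacks_evidence`, and the function names are all hypothetical.

```python
from collections import Counter

# Hypothetical labelled examples; a real system would learn from many marked responses.
TRAINING = [
    ("the data shows a clear trend because the study measured outcomes", "uses_evidence"),
    ("according to the survey results the evidence supports this claim", "uses_evidence"),
    ("i think it is good and everyone knows it is true", "lacks_evidence"),
    ("it is obviously right and i feel strongly about it", "lacks_evidence"),
]


def tokens(text):
    """Split a response into lowercase words."""
    return text.lower().split()


def train(examples):
    """Count how often each word appears under each label."""
    counts = {}
    for text, label in examples:
        counts.setdefault(label, Counter()).update(tokens(text))
    return counts


def classify(model, text):
    """Return (label, scores). Scores are per-label word-overlap ratios."""
    words = tokens(text)
    scores = {
        label: sum(counter[w] for w in words) / sum(counter.values())
        for label, counter in model.items()
    }
    # Sorting first makes tie-breaking deterministic.
    best = max(sorted(scores), key=scores.get)
    return best, scores


def accuracy(model, held_out):
    """Benchmark the model against responses a human has already marked."""
    correct = sum(classify(model, text)[0] == label for text, label in held_out)
    return correct / len(held_out)


model = train(TRAINING)
label, scores = classify(model, "the evidence from the study supports the claim")
print(label, scores)
```

Three properties matter here: the output is always one of a fixed set of labels, the same response always receives the same label, and the per-label scores can be inspected and checked against held-out human marks with `accuracy`.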

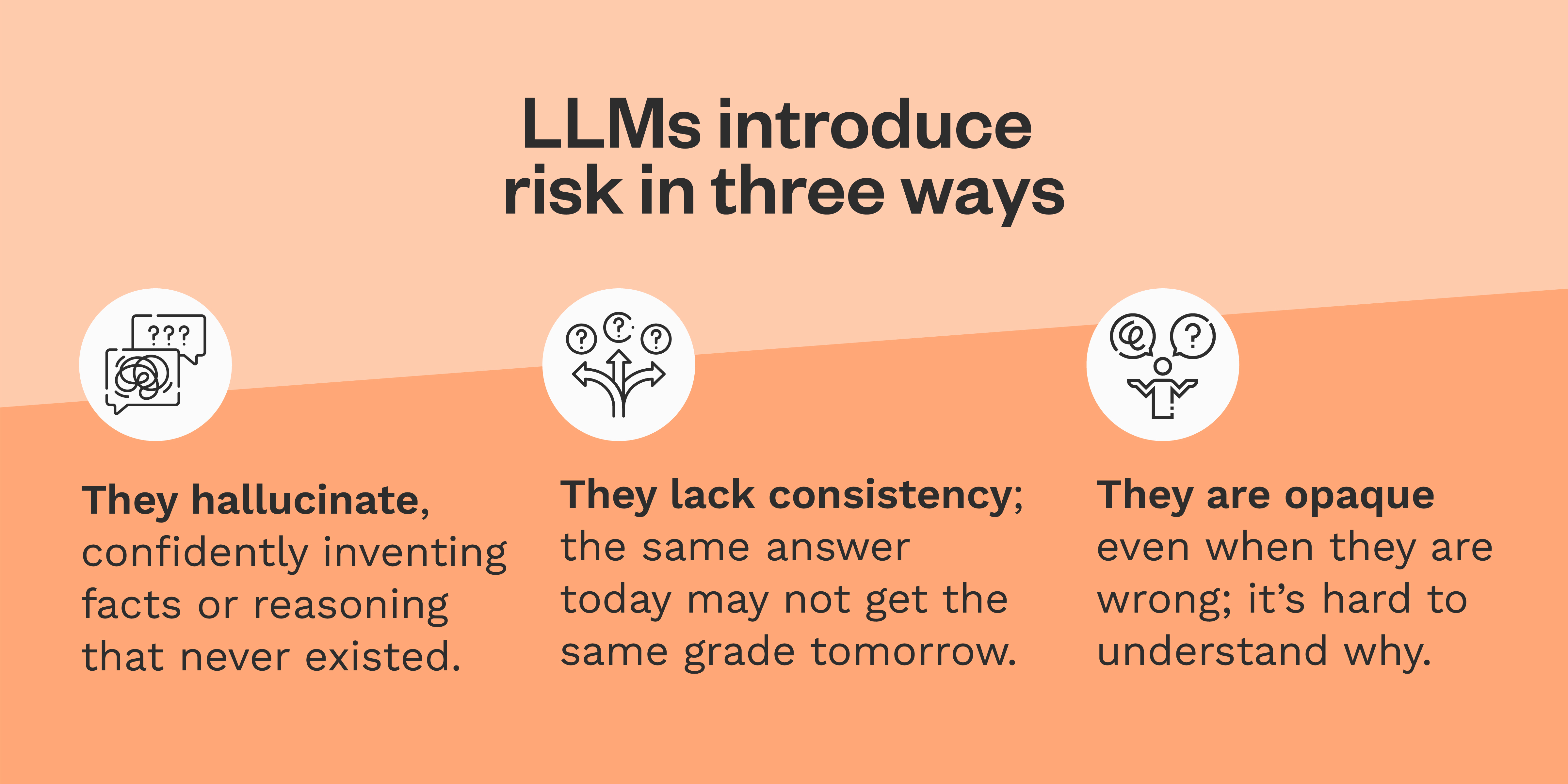

Generative models, on the other hand, are built to create, and LLMs fall into this category. They’re the poets of the AI world: experts at spinning out text, completing sentences and even writing code. But ask them to decide whether a student deserves a B+ or a C, and things start to unravel. Why? Because their inner workings are grounded not in truth but in probability. They don’t "know" what is a fact and what is not. They predict the next likely word based on patterns. And that is where the danger creeps in.
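A small sketch shows what "predicting the next likely word" means for a grade. The probabilities below are invented for illustration and are not measured from any real model; `NEXT_TOKEN_PROBS`, `sample_grade` and `sample_many` are hypothetical names. The point is only that sampling from a distribution can give the same essay different grades on different runs.

```python
import random
from collections import Counter

# Hypothetical next-token probabilities after a prompt ending "Grade:".
# These numbers are made up for illustration, not taken from a real model.
NEXT_TOKEN_PROBS = {"B+": 0.5, "B": 0.3, "C": 0.2}


def sample_grade(rng):
    """Draw one 'grade' token according to the toy probabilities."""
    options = list(NEXT_TOKEN_PROBS)
    weights = list(NEXT_TOKEN_PROBS.values())
    return rng.choices(options, weights=weights, k=1)[0]


def sample_many(n, seed=0):
    """Ask the same question n times, as if re-running the same prompt."""
    rng = random.Random(seed)
    return [sample_grade(rng) for _ in range(n)]


grades = sample_many(1000)
print(Counter(grades))  # the same input spreads across several different grades
```

Lowering the sampling randomness can narrow this spread, but the grade still comes from a distribution over likely tokens rather than from an evaluation against a marking scheme.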

Grading isn’t just a task; it’s a high-stakes, high-risk decision.

Grading is not a mechanical checkbox activity. It's a nuanced process that affects student confidence, educational outcomes and often, future opportunities. Mistakes are not just frustrating but consequential.

Imagine a student appealing their grade, and your only explanation is, “That is what the model said.” That’s not just bad pedagogy. It’s bad governance.

The data they learn from can’t be unlearned.

Another overlooked issue is how these models handle data. When you input student work into an open system like ChatGPT, you are not just analysing it; you are feeding it into a black hole. The provider’s privacy policy is clear: what you submit may be used to train future versions. That means sensitive academic content, once submitted and used for training, becomes inseparable from the model’s internal logic.

This is the digital equivalent of pouring your private notes into a communal jar of ink; once mixed, you can’t take them back. At Graide, we take this seriously. Our classification systems are trained on your representative data, hosted in secure environments and designed for transparent, explainable outcomes. This is what students, teachers and institutions deserve.

You Can’t Prompt Your Way Out of Risk

It’s tempting to think that clever prompts or layers of human review can tame the risks of LLMs. But when a model is fundamentally unpredictable and unconstrained, no prompt can make it safe for high-stakes assessment. Prompting may finesse the surface, but it doesn’t fix what’s under the hood.

And if we are being honest, education isn’t the place for guesswork.

The Bottom Line

LLMs have a place in education for ideation, tutoring, drafting and even generating educational content. But grading is different. It requires structure, explainability, fairness and fidelity to learning goals.

That is why at Graide, we don’t use LLMs. We use classification AI, built with guardrails, trained for your context and evaluated like a human marker would be.

See how Graide works
